
An onscreen evaluation system provides many advantages:

- Ability to generate results quickly and accurately

- Digital management and storage of answer sheets

- Easy retrieval of answer sheets at any time, from any location

- Examiners can evaluate digital copies of answer sheets quickly

- The accuracy of evaluation improves significantly

However, the success of any technology implementation depends on the participation of its stakeholders and on the training practices the university adopts. A lack of training or hands-on exposure to the onscreen evaluation system can hamper the progress of the overall evaluation process for the educational institute or university.

The following are some of the key mistakes to avoid if you want to make onscreen evaluation a success at your educational institution.

1. Not Implementing a Pilot Phase

Any new system should be adopted in phases, with continuous feedback from stakeholders. The pilot phase is the key phase that helps institutes adopt a new system: it allows examiners to go through a mock evaluation process.

The pilot phase can be monitored by the platform's technology experts along with key stakeholders of the educational institution, including the controller of examinations and the registrar.

Some institutions have adopted an onscreen evaluation system in a hurry, without any testing or pilot phase. This has resulted in errors during the answer sheet evaluation phase.

Skipping the pilot phase also means missing essential feedback about overall system functionality, which lowers acceptance of the new system.

Splashgain's onscreen evaluation platform follows best practices, and the pilot phase is one of the essential phases of its implementation process.

The pilot phase has helped many universities and autonomous institutes get hands-on experience with the system, and this has led to its successful adoption by evaluators and faculty members of these institutions.

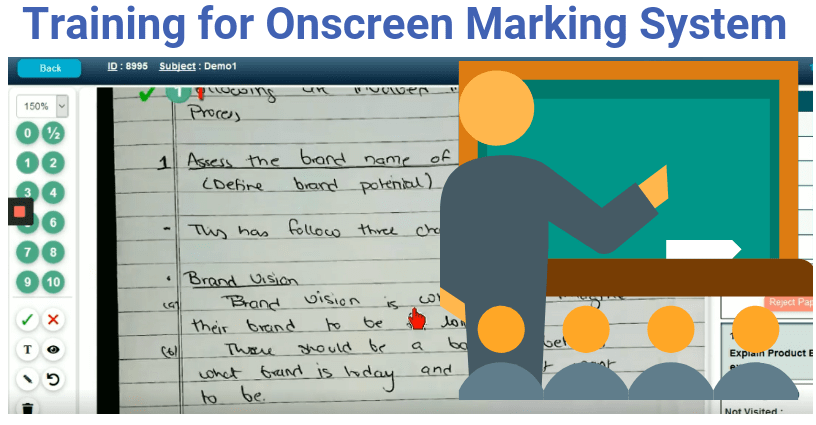

2. Not Providing Enough Hands-on Training to Evaluators/Moderators

In addition to the pilot phase, providing training material along with live training sessions on how to use the onscreen evaluation system is critical to the overall success of the process.

A hands-on training session should be conducted before any examiner starts evaluating. Without such sessions, examiners may not understand the functionality and usability of the system, which can hamper the successful adoption and implementation of onscreen evaluation.

Some universities have tried to implement an onscreen evaluation system on a large scale but failed to provide proper hands-on training to each evaluator or moderator.

This increases resistance to the new system: teachers and professors may oppose its adoption because they have not been trained.

Splashgain's onscreen evaluation platform provides live, class-based training sessions to each evaluator, along with dedicated training videos and help documents. These help each evaluator understand the entire system with ease.

An onscreen evaluation system can cut short the time required to evaluate a particular answer sheet, making it possible to evaluate an individual answer sheet within 4 to 5 minutes. Proper training is what makes this possible: if evaluators are not properly trained and are unaware of the system's usage and features, adopting the system will be difficult.

3. Not Providing Support During the Evaluation Phase

While evaluating answer sheets, evaluators may face technical or functional difficulties. Proper help and support should be available to resolve their queries so that the answer sheet evaluation phase runs smoothly.

If your system fails to provide the right kind of support in a timely manner, frustration and dissatisfaction grow among professors and evaluators, and result processing can be delayed.

If your university is adopting an onscreen evaluation system on a large scale, with hundreds of evaluators marking answer sheets digitally, it is essential to have a support system, such as live chat, a phone helpline, and email support, to resolve evaluators' queries.

Splashgain's onscreen evaluation platform provides extensive, dedicated support during the evaluation phase, including dedicated helpline numbers, instant email support, and live chat support to resolve queries as they arise.

4. Not Keeping Track of Online Storage of Answer Sheet Digital Copies

Digital onscreen marking simplifies result processing and helps you declare results quickly. However, for audit purposes, it is important to store digital copies of the answer sheets for a specified period of 3 to 5 years, as per the norms set by the University Grants Commission (UGC) or the relevant regulatory body for your educational institution.

There should be a proper mechanism to store evaluated copies securely and to retrieve any copy in the future as required. Many institutions have failed to define proper processes for storing digital copies of answer sheets, and some have not even kept a backup of their historical digital copies.

This can result in process noncompliance and damage the university's brand value. Students may file a Right to Information (RTI) request to obtain their evaluated answer sheet. In such cases, there must be a proper way to retrieve the historical digital copy and issue it to the student on request.

Splashgain maintains all historical answer sheets in secure blob storage with an additional backup/failover mechanism. This allows educational institutions to retrieve any answer sheet using just the admin panel and suitable credentials.

5. Not Taking Feedback from Examiners, Moderators, and the Controller of Examinations

The successful adoption and execution of any system depends on a feedback mechanism. Online service providers, for example, continuously seek feedback from their users, which helps them improve the usability and functionality of their systems.

Likewise, the success of an onscreen evaluation system depends on feedback from professors, evaluators, and moderators, as well as senior members of the educational institution or university.

Many educational institutions have adopted onscreen evaluation systems without a feedback mechanism. This can prove to be a hindrance to the system's successful adoption across the institution, and you may not be able to derive all the benefits of the platform.

Conclusion

The success of any new system implementation depends on active usage and participation from its stakeholders. A continuous loop of feedback and improvement helps take the system to the next level.

Onscreen evaluation systems are helping universities and educational institutes simplify result processing. Some universities have cut their result processing time from 45 days to just 8 days. Logistics and coordination have been simplified, resulting in cost savings for the institution. If you avoid the five mistakes described in this article, successful adoption of an onscreen evaluation system can become a reality for your educational institute.

Have you faced any other challenges during the onscreen evaluation implementation process? Please share them with us.
